AI-enabled insurance claim checks tool

In its third year of existence, Napo had a robust internal UX for claim handling. However, as the company continued to grow, average human assessment speed remained at 1.2 claims per hour.

The company started developing its own AI-powered claim assessment tool. My goal as a UX designer was to create a new tool that enables assessors to check and verify AI assessments.

Headquarters

London, UK

Founded

2021

Industry

Pet Insurance

Revenue

TBC

Company size

50-100 people

Challenge

In 2023, the assessment process consisted of around 40 checks and involved up to 5 specialists. The business wanted to increase the speed of assessments tenfold. My mission was to design a tool that let the relevant specialists perform the essential checks with the highest accuracy.

Results

I found that there were just 6 checks that actually defined whether a claim would be accepted. I created an interface that would highlight low-confidence claims to assessors, and have them focus solely on the one impactful check. This also allowed us to train the AI by learning from the assessors' reasoning.
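For readers who want to see the routing idea in concrete terms, here is a minimal sketch of confidence-based triage. It is an illustration only, not Napo's implementation: the threshold value, the check names, and every class and function name are hypothetical, since the case study does not describe them.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Hypothetical cut-off; the real value is not stated in the case study.
CONFIDENCE_THRESHOLD = 0.9


@dataclass
class CheckResult:
    name: str          # hypothetical check name
    confidence: float  # model confidence in its own verdict, 0.0 to 1.0


@dataclass
class Claim:
    claim_id: str
    checks: List[CheckResult]


def route(
    claims: List[Claim], threshold: float = CONFIDENCE_THRESHOLD
) -> Tuple[List[str], Dict[str, List[str]]]:
    """Split claims into those that need no review and those that do.

    Returns (auto, review): `auto` lists claim ids where every check met
    the threshold; `review` maps each remaining claim id to the names of
    the low-confidence checks an assessor should look at.
    """
    auto: List[str] = []
    review: Dict[str, List[str]] = {}
    for claim in claims:
        low = [c.name for c in claim.checks if c.confidence < threshold]
        if low:
            review[claim.claim_id] = low
        else:
            auto.append(claim.claim_id)
    return auto, review


# Made-up sample data for illustration.
sample = [
    Claim("A", [CheckResult("pre-existing condition", 0.97),
                CheckResult("waiting period", 0.95)]),
    Claim("B", [CheckResult("pre-existing condition", 0.62),
                CheckResult("waiting period", 0.99)]),
]
auto, review = route(sample)
print(auto)    # ['A']
print(review)  # {'B': ['pre-existing condition']}
```

In this sketch the assessor for claim B sees only the single low-confidence check, which mirrors the focus described above.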

x10

Assessment speed gain

97%

Human decision accuracy

40%

Claims fully automated

Process

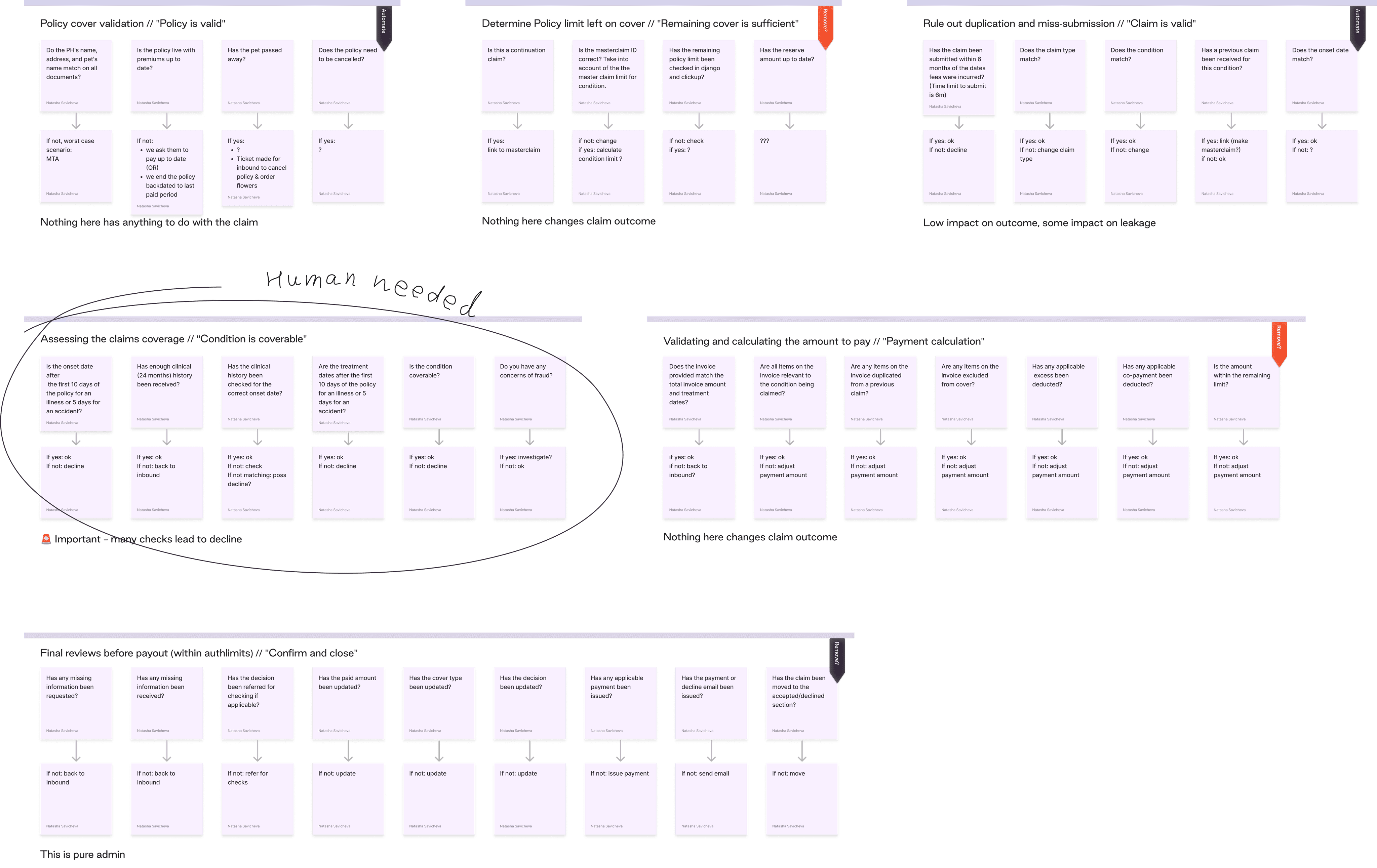

Problem definition: This project came with a very wide scope. First, I sorted checks from trivial to essential and put them in buckets: 'Policy', 'Remaining cover', 'Claim', 'Condition', 'Payment'. Then I ran a session with Customer Operations to understand which checks actually influence the outcome, which can be automated, and which can be eliminated as excessive. This reduced my scope to just 6 checks in the 'Condition' bucket.

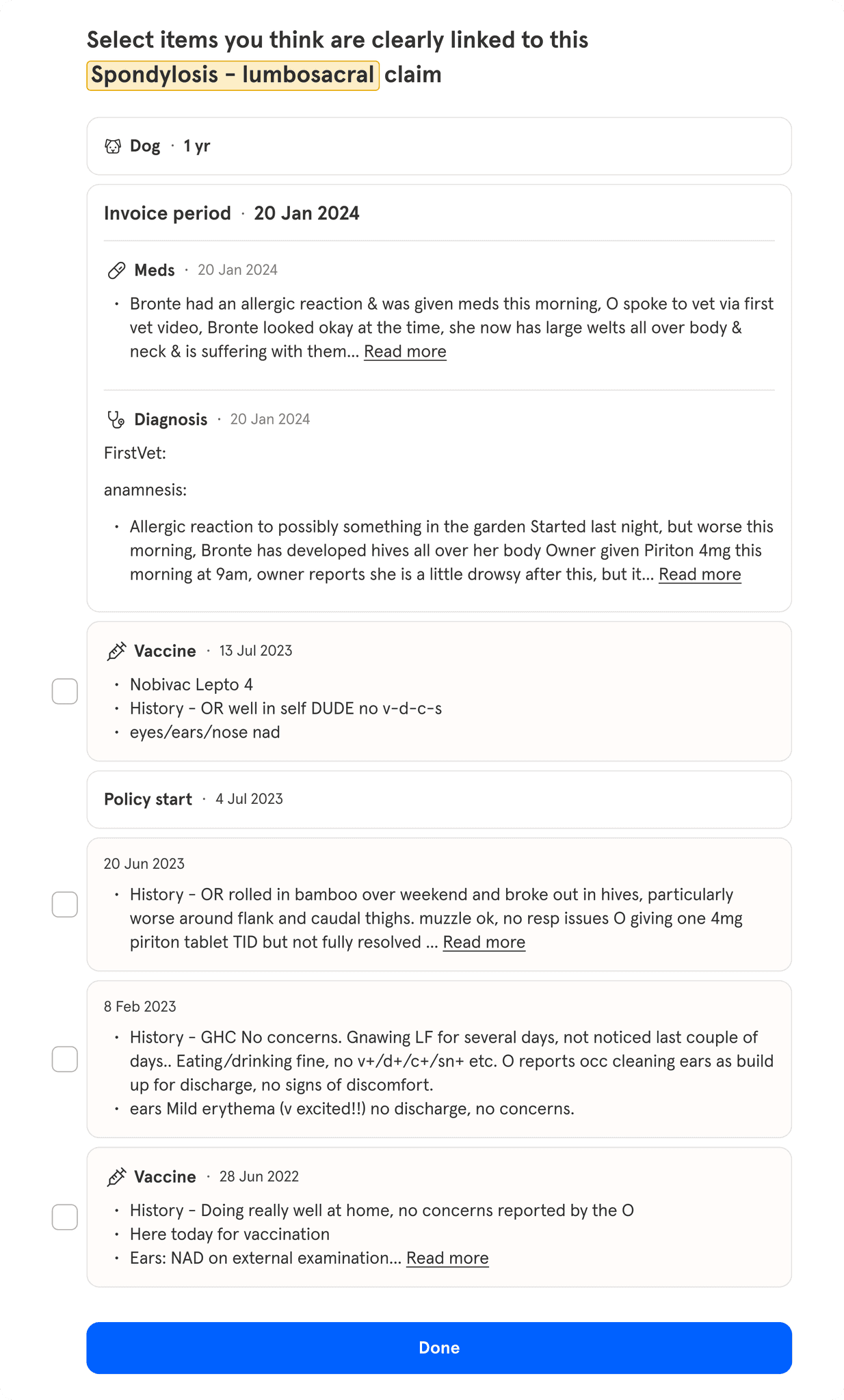

Information Architecture: I had to strike the right balance: give assessors enough context for accurate judgements, but not so much that they get sidetracked by other issues. I decided to start from essential info only and build it up based on feedback.

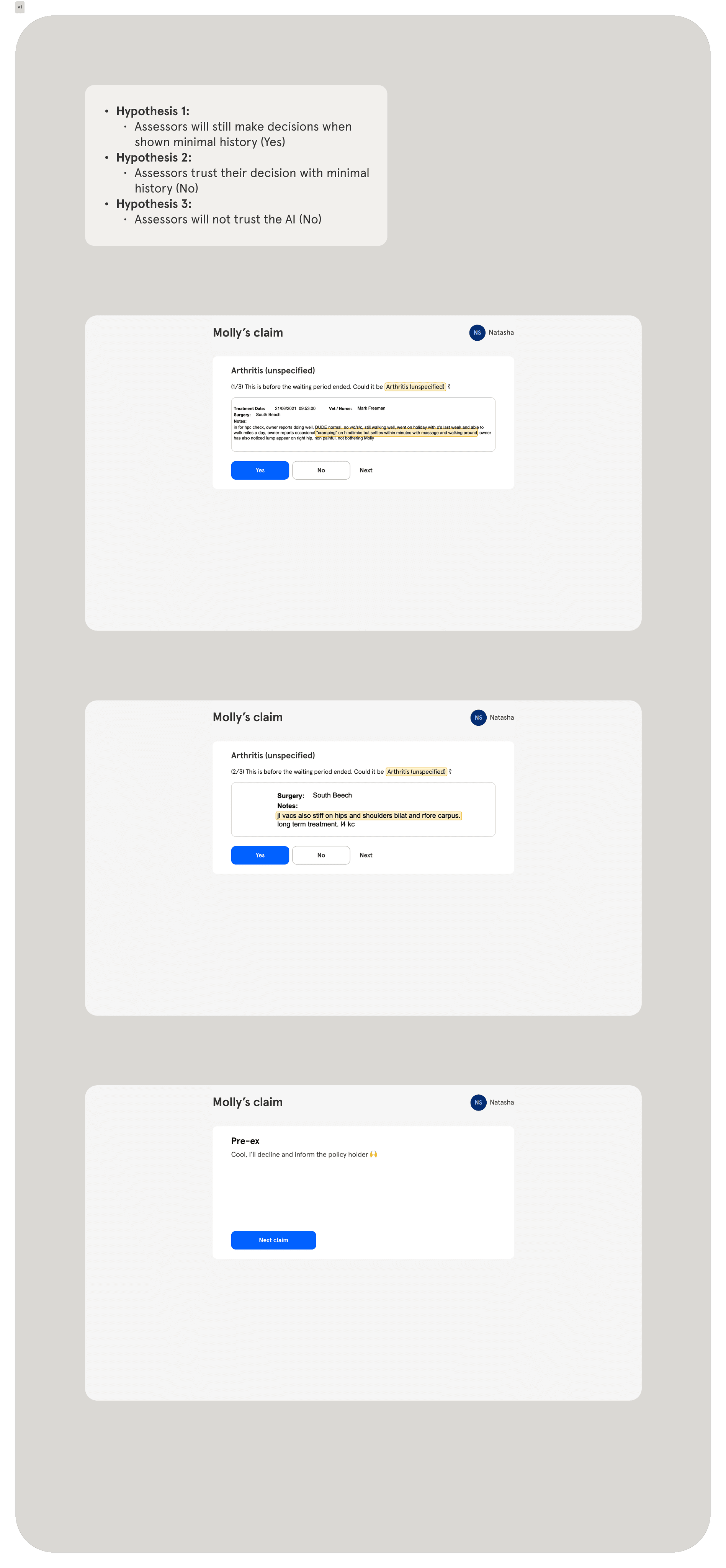

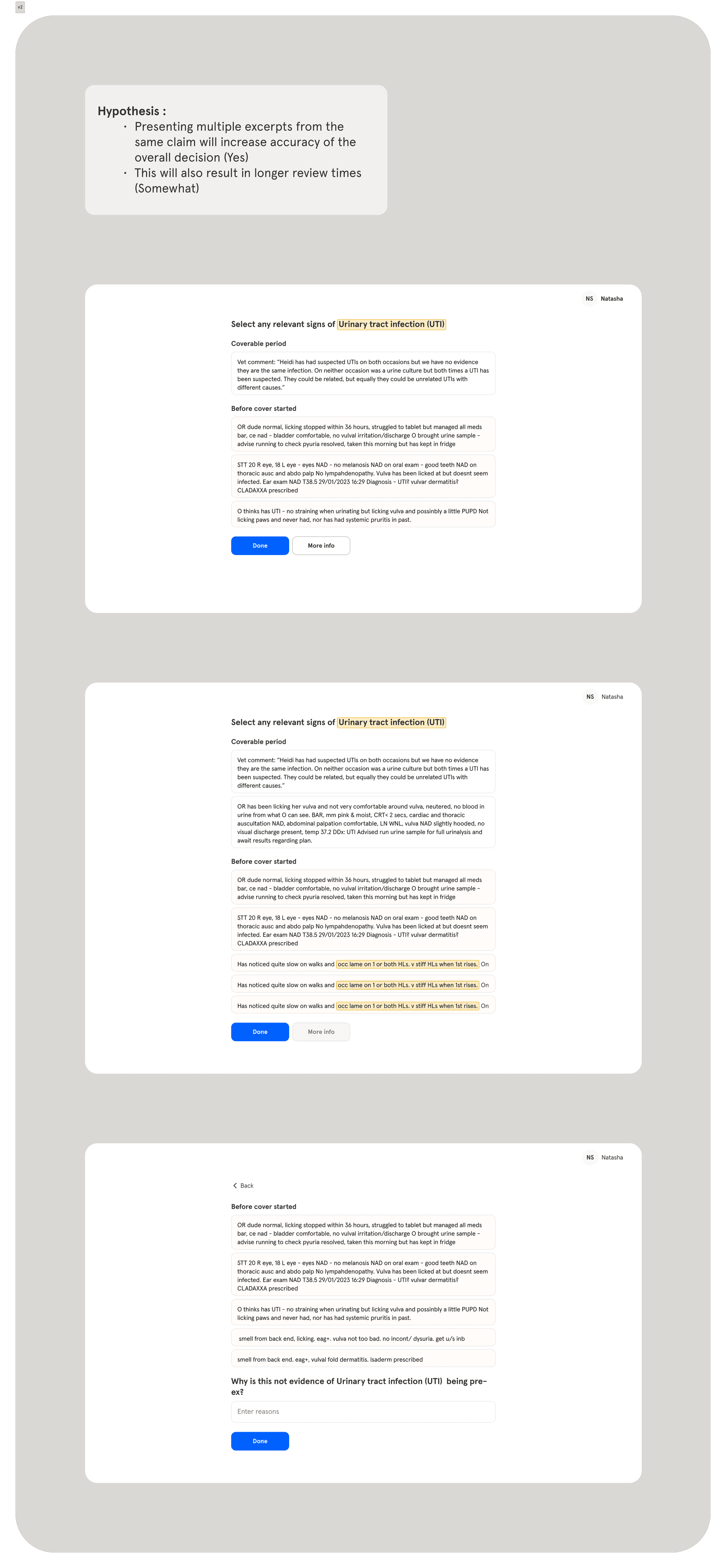

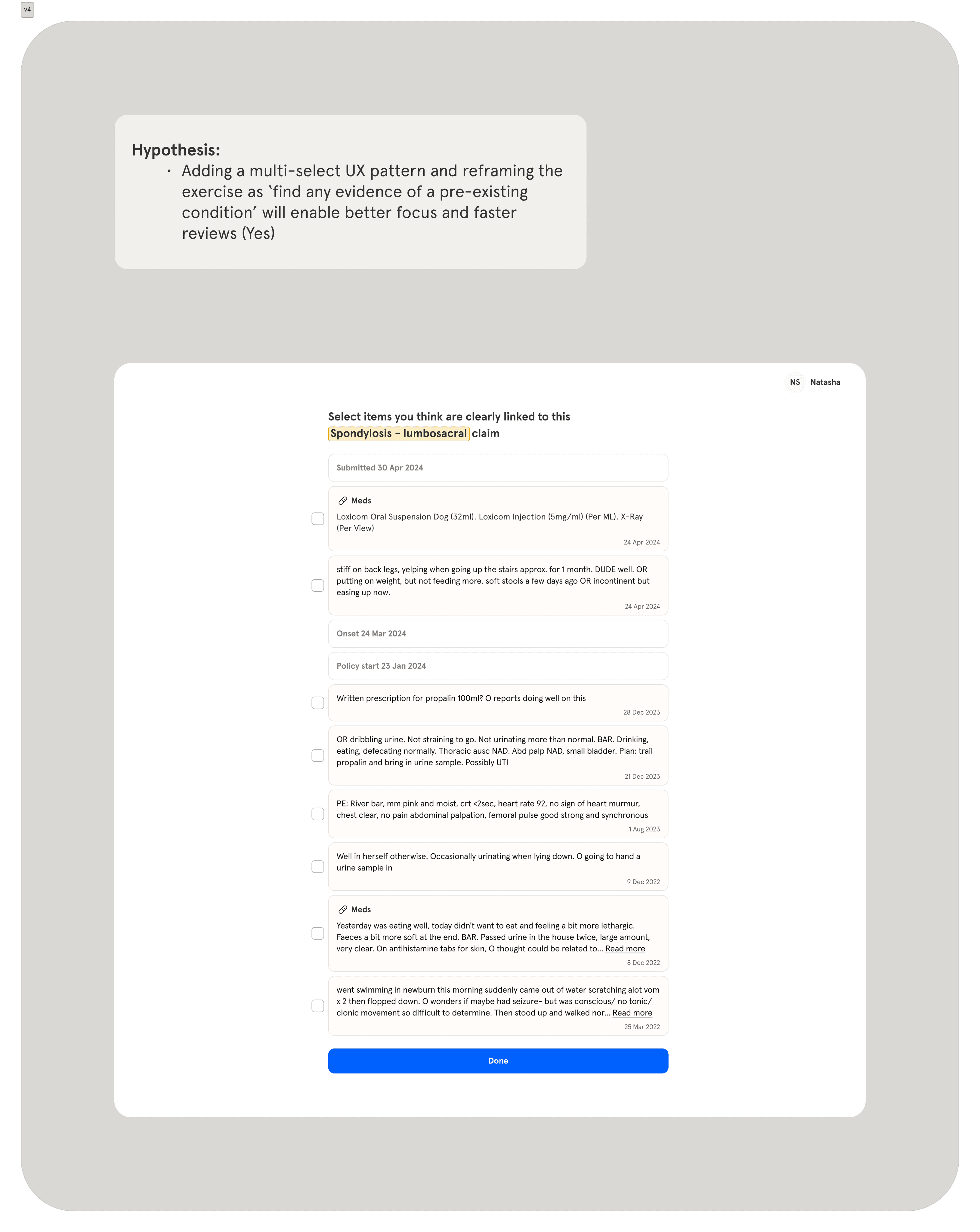

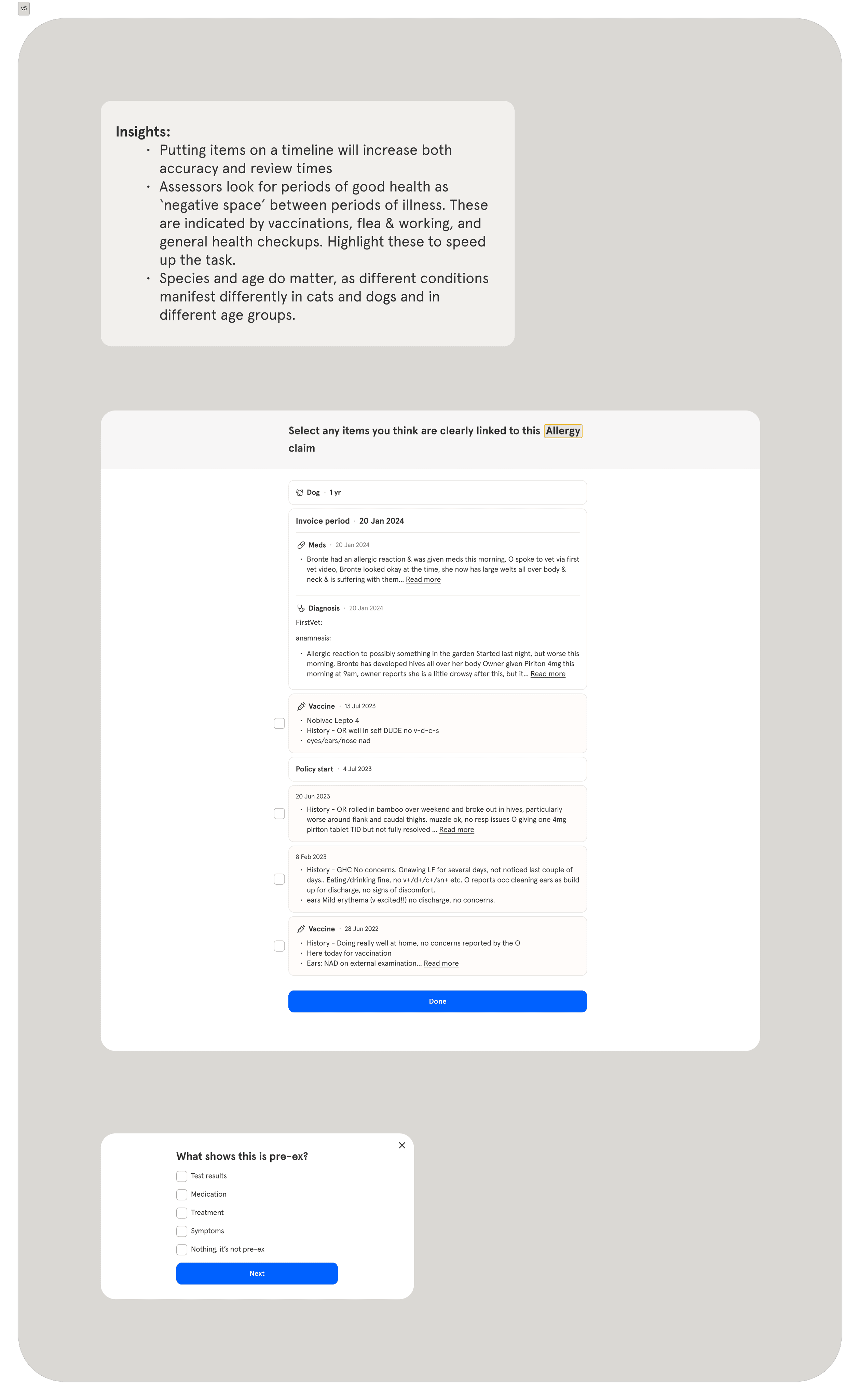

Prototyping & iterating: In the first iteration, the Checks tool contained a bare minimum of claim info. We tested it with three Senior Assessors, using real claim data. Whenever they said that something was missing, I probed further, and if there was a good rationale, I added it. This way I ended up adding a timeline, highlighting key symptoms, vaccinations and meds, and noting key policy dates.

Proof of concept: The final iteration was tested again on 15 claims with two leading assessors, and they both got 97% of claim decisions right (cross-checked by each other with full claim data). Once we were certain of what was possible, the company made plans for implementation.

“When implementing cutting-edge LLM workflows for claim assessment, it was very important to us to be able to quickly and efficiently sense-check the output. The Checks Tool not just achieved this, but allowed us to train the model on the assessor's feedback.”

Ewan Jones

Staff Backend Engineer

Conclusion

By following this iterative, feedback-based approach to UX, I exceeded the speed goal: three Senior Assessors each assessed 12+ claims an hour. I also scoped out the trivial binary checks, which were automated right away. As a result, we reached 97% accuracy in assessors' reviews. This work unblocked end-to-end claim automation.